Introducing Open Source by Pieces

Recap our latest live stream covering our initial Open Source by Pieces initiative, how you can get involved, and steps to contribute to our first SDK.

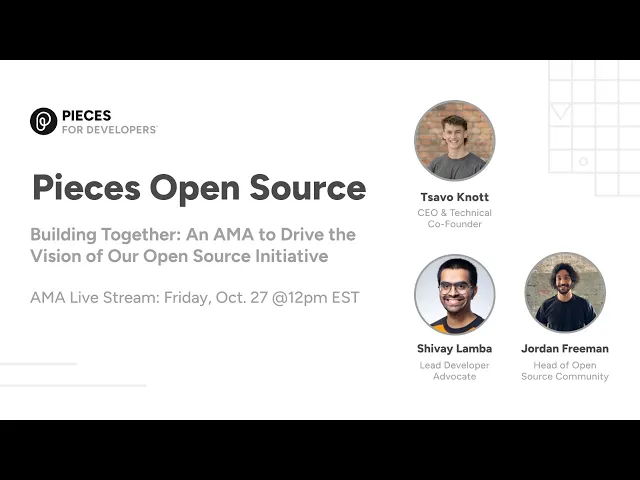

In our latest Ask Me Anything (AMA) session, we announced the launch of our open source initiative at Pieces. The panel, consisting of Tsavo Knott (CEO), Jordan Freeman (Head of Open Source), and Shivay Lamba (Lead Developer Advocate), shared their insights on the importance of open source contributions, the release of the Pieces TypeScript SDK, and answered questions from our community. The AMA also touched upon the future of Pieces' open-source projects and community involvement.

The main focus of this livestream was on the release of the Pieces TypeScript SDK, the importance of community involvement in open source projects, and the potential of Pieces OS. This session is a must-watch for anyone interested in understanding how these advanced features can augment their existing workflow and enhance productivity.

If you missed the live event, don't worry. We've summarized the key takeaways, highlighted some of the most thought-provoking questions and answers, and even shared video snippets of the discussion to get you up to speed!

Topics Covered

(1:15) Introducing Open Source by Pieces

(6:17) Getting Started with @pieces.app/client and testing the NPM package

(8:39) Language SDKs

(10:19) npm Documentation

(15:26) Starter Project Demo

(47:38) How to join and contribute to the Pieces Open Source Community

(55:46) Potential of building with Pieces OS + Open Source Community

https://www.youtube.com/watch?v=9BmGPGsB3n4

Our History and Vision with Open Source

Open source has long been the backbone of the internet, and here at Pieces we felt it was finally our time to support, contribute to, and help nurture the open source community. The powerful workflow tools that we have created, coupled with the responses from our users, let us know that we truly had something to offer developers just like ourselves working on other projects around the world.

The AMA discussion opened with Tsavo expressing the team’s excitement about discussing open source initiatives at Pieces. He highlighted the team’s commitment to building products that enhance developer productivity and expressed its eagerness to work with the community.

Tsavo discussed how Pieces has been considering open source for a long time, starting with the use of the OpenAPI specification. He mentioned that most of the code inside Pieces is generated from OpenAPI and gRPC ProtoBuf specs, which allows for easy conversion into client-side SDKs and core packages.

Tsavo also announced the plan to open source some of our integrations and enable the community to integrate with Pieces directly. He acknowledged that the Pieces team can't build everything, and since developers use a variety of tools, we aim to ease into the open source community and let our user base build additional tooling and projects around Pieces.

Tsavo also discussed the potential of client-side SDKs to pull out, access, organize, and format data from Pieces. This gives client-side SDKs two major pillars: the ability to save, access, search, and reuse data, and the ability to build co-pilots and workflow agents on top of Pieces OS using its AI capabilities.

While we currently only support a single NPM package, we look forward to adding to that list in the coming weeks with languages like Dart, Kotlin, and many more. After reading this article you will have a clear picture of what we currently support, what’s coming in the future, and how to get involved with the Open Source by Pieces community, along with a starter project you can build and details on the @pieces.app/client package that we just released!

Getting Started with @pieces.app/client and testing the NPM package

Hello there - this is a brief walkthrough of what was introduced during the AMA with some of our initial configurations. Our repo here will continually be updated as the project progresses with the community.

Follow along with this initial walkthrough to start exploring some of the data you have access to use when interacting with the package.

Configuration & Setup with NPM

Creating the base of your project

Let’s get started with the base of your new React project where we will learn about manipulating Pieces OS and creating our own assets locally on device.

Follow this guide here to check your node and npm versions before getting started. Download the appropriate updates or install npm to have success with this walkthrough.

Run this command in the directory of your choice to initialize your react application:

Open your tsconfig.json file and ensure you have the following settings. This is how your tsconfig.json should look when you are done:

Installing with npm

First install both typescript and ts-node through npm to support some scripts, building the application and a few other things:

Then getting the types package is super beneficial and in some cases needed to work in this environment:

Install a few react libraries to get a more visual experience while learning about the api itself and to support the browser.

Here are those npm installations for @types:

Along with the typing, you will need to install the full packages for react, react-dom, and react scripts to properly get started in this project.

Don’t forget to install the @pieces.app/client npm package:

And last but not least, it's a good idea to add a few scripts into your package.json to help with development:

Setting up your public Directory

Next you can go ahead and create a new directory called public (if it is not already created) that will hold your index.html file where your entry point exists. Create the file inside of your public directory and save it there. You do not have to add anything to the index.html file at this time, as we will come back to this later. If you would like, you can add the following as a placeholder for now.

If there are other elements here you can remove them and replace them with the following:

Setting up your src Directory

Now that the initial .html file has been created, you can start to work on your src directory and get the rest of your core files added to the project.

Inside of the src directory, add two files:

index.tsx - where the core info is and where we are going to be spending most of the time during this project following the setup.

Once you open index.tsx you should follow these steps to get your base Application window created:

1. Import the full react library at the top of your file, along with a single import from react-dom:

2. Follow that with the full App() function and main run of the application:

3. And then add these two lines to target the root element by using the ID that is on it:

4. And finally add your render call to your file:

When working in this environment I noticed some @babel errors during the build process (or running npm run start) and found this workaround that you can install via npm as well here.

Running your Project for the First Time

Everything has been added. We are nearly there and will need to perform a final few checks before starting our dev project.

1. Be sure that Pieces OS is running

2. Double check that the port is localhost:1000

3. (optional) Run another npm install (because it never hurts)

Now that everything is ready to go, let's run this command here:

And after a few seconds you should be able to see in your chrome browser (or your primary browser) a blank window that looks like this:

You have now successfully set up your dev environment, and will be ready to test different endpoints inside of Pieces OS.

Connecting your Application

When Pieces OS is running in the background of your machine, it communicates with other local applications that use Pieces software. Up until recently, it only supported internally built tools.

As each plugin, extension, or application initializes they 'reach out' to Pieces OS and authenticate with the application themselves. There are a number of application formats that we support and provide for each of our applications. When developing on Pieces OS, you can use "BRAVE" to avoid any issues with other applications.

Creating Application

The application model describes what application a format or analytics event originated from. This is passed along when initializing your dev environment and creates a connection to Pieces OS.

To create the Application object for your project, you will need to make sure that you have the following three things:

1. Create a tracked_application json object to hold your post request data

2. Define your url for the /connect function

3. Log the output using console.log() once your connect() method is complete

tracked_application

Connecting your application here is as easy as a single POST request and can be done via the Response interface of the Fetch API. Remember that you can name this whatever you would like to, just be sure to include the updated variable name in the options down below.

When creating the tracked_application item, you will need to use a type that is not available inside of the current npm_deployment.

This structure is the same as the tracked_application full example you see here below, and the only difference between the unavailable type SeededTrackedApplication and the available type TrackedApplication is id: number.

First let’s take a look at the tracked_application object:

name: Brave

platform: Depending on your current environment, you need to set the platform parameter to match your current operating system. Select between .Macos, .Windows, and .Linux.

Be sure to double check that you have the following import added to the first few lines of your index.tsx file if you have not already:

import * as Pieces from "@pieces.app/client";
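To make the shape concrete, here is a minimal sketch of a tracked_application object. It uses local stand-in types rather than the types from the npm package, and the string values ("BRAVE", "MACOS"), the version field, and the makeTrackedApplication helper are assumptions for illustration, so check them against the SDK before relying on them.

```typescript
// Local stand-ins for the SDK's types. The string values are assumptions
// for illustration; the real package exposes its own enums.
type Platform = "MACOS" | "WINDOWS" | "LINUX";

// The seeded form carries no id; Pieces OS assigns one when you connect.
interface SeededTrackedApplication {
  name: string;       // which application format to register as
  version: string;    // hypothetical version string for your own app
  platform: Platform; // must match your operating system
}

// Build the object that will be sent in the body of the /connect request.
function makeTrackedApplication(platform: Platform): SeededTrackedApplication {
  return { name: "BRAVE", version: "0.0.1", platform };
}

const tracked_application = makeTrackedApplication("MACOS");
console.log(tracked_application);
```

Swap "MACOS" for the value that matches your machine before sending the object to Pieces OS.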

Creating connect() Function

When your program starts, it needs to connect to Pieces OS to gain access to any functional data and to exchange information on the localhost:1000 route. Now that you have your tracked_application - let’s get into the details.

Start by defining your connect function and add the initial /connect route to your function as the url variable and then attach the options object. Include your tracked_application object passed into a JSON.stringify() method under the application parameter like so:
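As a rough sketch of that description, assuming Pieces OS is listening on http://localhost:1000 and that the seed is sent under an application key: the buildConnectRequest helper and the sample field values are hypothetical names used only for this example.

```typescript
// Assumed base URL, taken from the localhost:1000 port used in this guide.
const BASE_URL = "http://localhost:1000";

// Illustrative seed; the field values are assumptions, not SDK constants.
const tracked_application = { name: "BRAVE", version: "0.0.1", platform: "MACOS" };

// Build the url and fetch options for the /connect POST request.
function buildConnectRequest(application: object) {
  const url = `${BASE_URL}/connect`;
  const options = {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // The seed goes under the `application` key, serialized as JSON.
    body: JSON.stringify({ application }),
  };
  return { url, options };
}

// Send the request and parse the JSON reply from Pieces OS.
async function connect(): Promise<unknown> {
  const { url, options } = buildConnectRequest(tracked_application);
  const response = await fetch(url, options);
  return response.json();
}
```

Keeping the request construction in its own function makes it easy to inspect the url and body before anything is sent.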

In the future we will be providing a cleaner API for connecting your application and registering it with Pieces OS. This POST request will suffice for now.

Now let’s add a few more things to this file:

_flag: Boolean - for marking the success or failure of the try catch

try, catch

response - for capturing the fetch response back from OS Server

data - for storing the data and logging it

e - error that is coming back if the response fails
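Put together, those pieces might look like the sketch below. To keep it runnable without Pieces OS, the function takes a fetch-like post argument (a hypothetical addition for this example) instead of calling fetch directly; the URL and body shape are the same assumptions used earlier in this walkthrough.

```typescript
// Minimal shape of the fetch-like function this sketch needs, so a stub can
// stand in for the browser's fetch when Pieces OS is not running.
type PostFn = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ json(): Promise<unknown> }>;

interface ConnectOutcome {
  _flag: boolean; // true when the request and JSON parsing both succeeded
  data: unknown;  // parsed response on success, the caught error on failure
}

async function connect(post: PostFn, application: object): Promise<ConnectOutcome> {
  let _flag = false;
  let data: unknown;
  try {
    const response = await post("http://localhost:1000/connect", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ application }),
    });
    data = await response.json();
    _flag = true;
  } catch (e) {
    // Network failure or invalid JSON: keep the error so the caller can log it.
    data = e;
  }
  return { _flag, data };
}
```

In the browser you could pass (url, init) => fetch(url, init) as the first argument and your tracked_application as the second.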

Running connect().then():

This will just run the connect function and then log the response in your console, inside of your browser:

Below this final line should be:

function App() ...

const rootElement ...

root.render(...)

Here is the entire file for you to double check your work:

View Console Output in your Browser

Now that everything has been correctly configured (fingers crossed) you can run your sample application and connect to Pieces OS for the first time.

Inside of your terminal at the root directory of your project, use NPM to run one of the scripts that we added to the package.json file called "start":

And you should have the same content in the main browser window as before once this completes. But if you open up your inspector using cmd+option+i or ctrl+shift+c you will see this inside of your console:

This is the full OS response object with all of the data that you will need to get going with other endpoints. Your application is now connected and ready to go for the rest of your exploration and discovery!

Follow along with these next steps to learn about assets and formats, two things that are very important for managing any form of data with Pieces OS.

Getting Started with Asset + /assets

Asset is a very important model whose primary purpose is to manage the seeded data that comes into the application and is stored inside of Pieces OS. Each asset is an identifiable piece of saved data, or pre-seeded data.

/assets is equally important, but instead of containing a single asset with parameters storing data on it, Assets serves as the list of type: Asset objects that are stored there. It is also where you will find the operations for adding, deleting, and searching, along with other functions that reference a number of different snippets to make a comparison. For instance:

If you want to create a snippet (let's call it var) and send it to the master Assets list, you would first create var itself with the proper formats and data added to the var object, then send the newly created SeededAsset over to the Assets list (assets/create). You will then receive the full asset back as the response from the server. Cool, right?

Traditionally, Assets is a linear list of flat Asset objects stored in an array or list.

You can use an identifier called a UUID to get a specific asset from the asset list. But you'll learn more about that later on.
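A small sketch of that idea, using simplified stand-in types: the iterable field name and the sample UUIDs are assumptions for illustration, and a real Asset carries many more properties.

```typescript
// Simplified stand-ins for the SDK models; only the fields needed here.
interface Asset {
  id: string;   // UUID assigned by Pieces OS
  name: string;
}

// Assumption: the list wrapper keeps its assets in a flat array.
interface Assets {
  iterable: Asset[];
}

// Look up one asset in the flat list by its UUID.
function findAsset(assets: Assets, id: string): Asset | undefined {
  return assets.iterable.find((asset) => asset.id === id);
}

const assets: Assets = {
  iterable: [
    { id: "3f2b1c9e-0000-4000-8000-000000000001", name: "first snippet" },
    { id: "3f2b1c9e-0000-4000-8000-000000000002", name: "second snippet" },
  ],
};

console.log(findAsset(assets, "3f2b1c9e-0000-4000-8000-000000000002")?.name);
```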

/asset

Initially when creating your application, you will have no snippets saved to your project, you will not be signed in, and you will not have completed onboarding. These are properties that you may see during this creation.

Check out localhost:1000/assets while Pieces OS is running to see the empty object that is there.

Creating your First Asset

While creating an asset, there are some required parameters that you will need to include, along with the proper format data.

For each Asset object, each required parameter must be included, and the Asset must be seeded before it is sent to be created.

SeededAsset

This seed data will become an asset. You can use this structure to provide data to Pieces OS, and it includes fewer parameters than what you will get back in your response. Let's get started with the seeded asset formatting before we pass this over to /assets.

At the top level of this object you will see:

schema

metadata

application (required)

format (required)

discovered

available

Schema, metadata, discovered and available are all parameters that have extensive use cases, but for now we are going to focus on application & format - the two required parameters for this object.

With each call you need to include your application object that you created earlier - and we can do this inside of the .then() following the return from connect() which is defined here:
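As an illustration only, a seed with just the two required parameters could be sketched like this. The trimmed-down types, the makeSeed helper, and the nested fragment.string.raw layout of format are assumptions, not the SDK's definitions; the application value stands in for whatever /connect returned.

```typescript
// Hypothetical, trimmed-down types for illustration only.
interface Application {
  id: string;
  name: string;
  platform: string;
}

interface SeededFormat {
  // Assumption: a text snippet is carried as a raw string fragment.
  fragment: { string: { raw: string } };
}

interface SeededAsset {
  application: Application; // required
  format: SeededFormat;     // required
}

// Combine the connected application with the raw snippet text.
function makeSeed(application: Application, raw: string): SeededAsset {
  return { application, format: { fragment: { string: { raw } } } };
}

// Stand-in for the application object returned by /connect.
const application: Application = { id: "example-id", name: "BRAVE", platform: "MACOS" };
const seed = makeSeed(application, "console.log('hello from Pieces');");
console.log(JSON.stringify(seed, null, 2));
```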

/assets/create

Now before continuing forward, we will need to prepare the create() function to connect to the proper /assets/create endpoint. Create slightly differs from connect, since previously our json object did not require any new data that was returned back from the server. In this case we will need to include the application data that was returned back from our initial call to /connect.

The create() function needs to accomplish a few things:

1. Create a new asset using our simple SeededAsset configuration that we just created as the seed object

2. Send a POST request to the new http://localhost:1000/assets/create endpoint with our seed data

3. Return the response back after this is completed

Here is what the create() function looks like in its entirety:
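A hedged sketch of such a function follows. It assumes the endpoint accepts a JSON body that wraps the seeded asset in an envelope with a type field (named _seed here); the envelope shape, the "SEEDED_ASSET" value, and the buildCreateRequest helper are assumptions for illustration rather than the confirmed API.

```typescript
// Assumed base URL, matching the localhost:1000 port used in this guide.
const BASE_URL = "http://localhost:1000";

// Trimmed-down stand-in for the SDK's SeededAsset type.
interface SeededAsset {
  application: object; // the application returned by /connect
  format: object;      // the snippet data to store
}

// Build the url and fetch options for the /assets/create POST request.
function buildCreateRequest(seededAsset: SeededAsset) {
  // Assumption: the endpoint expects the seed wrapped with a type marker.
  const _seed = { asset: seededAsset, type: "SEEDED_ASSET" };
  return {
    url: `${BASE_URL}/assets/create`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(_seed),
    },
  };
}

// Send the seed to Pieces OS and return the parsed asset from the response.
async function create(seededAsset: SeededAsset): Promise<unknown> {
  const { url, options } = buildCreateRequest(seededAsset);
  const response = await fetch(url, options);
  return response.json();
}
```

Inside the .then() that follows connect(), you could call create(seed).then(console.log) to print the new asset.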

To give you more of a true API experience with what is to come, here is what the create API endpoint looks like if you were to pass the _seed into it like so:

Now that we have the create function created, all that is left is to call create() and log our new asset to the console!

You can add this final call to the end of the connect.then():

Response

Once you receive your response back from Pieces OS, you will notice how drastically it differs from the seed you sent. There is quite a long list of parameters that you can store alongside your assets to make them more powerful.

The response back will look similar to the following snippet that you can see on a sharable link created from Pieces for Developers Desktop Application:

https://jwaf.pieces.cloud/?p=f0114f8b8e

View Your Data

Now when you follow this guide, you will be receiving this data back from inside of your console in the browser. But if you would like to view your data incrementally through the full browser window, you can navigate to http://localhost:1000/assets to view a full list of snippets that have been saved.

We use JSON Viewer internally when developing and recommend using some form of web based extension that assists with reading JSON Data.

Concluding our SDK First-Look

This is a very simple guide on how to get up and running using the @pieces.app/client npm package and create a web environment that you can build on top of. Fork this repo to get started and learn about the depth of possibilities you have with Pieces OS.

More guides will be coming soon around:

Using Pieces OS as a database

Creating a personal Copilot that understands your context

Learning about `/search` endpoints

...more!

How to join and contribute to the Pieces Open Source Community

Next Shivay, Lead Developer Advocate at Pieces, emphasized the importance of community involvement in driving open source adoption for Pieces and explained how folks from the community can get involved:

Open-Source Channel on Discord: We now have a dedicated open-source channel within the Pieces Discord server. This channel will serve as a platform for discussions around open-source projects, upcoming plugins, and SDKs for Pieces.

Community Managers: We have users like Sophyia (.sophyia), Mason and Shivay on the Discord server who will assist with onboarding and answer questions about open-source initiatives.

Contributions to Open-Source Projects: We encourage community members to contribute to the open-source projects, such as the newly announced TypeScript SDK. The Pieces team will be present to guide community members in working on open issues and accepting their suggestions about various open source projects, SDKs etc.

Regular Community Meetings: We will conduct regular community meetings on Discord to introduce new members, discuss ongoing projects and issues, and answer questions. These meetings will also serve as a platform for community members to interact directly with the Pieces staff.

Release of SDKs: We are planning to release a lot of SDKs soon for use by the community.

Open-Sourcing Plugins: We have some plans to open-source several Pieces plugins that developers love to use, and we welcome contributions to these open-source plugins.

Sponsorship of Open-Source Projects: In the future, we will also explore the possibility of sponsoring certain open-source projects around Pieces that are built and driven by the community.

https://youtu.be/fj6BYv8eoxc

https://youtu.be/1rU_rwUfdMw

Potential of building with Pieces OS + Open Source Community

As we approached the end of our session, we wanted to discuss the potential applications of Pieces OS and its built-in functionality. Jordan highlighted how developers can make interesting abstractions with just a small part of Pieces OS and integrate it into their projects, potentially enhancing their workflow and application tenfold.

Tsavo discussed the possibility of building web applications, extensions, and more powered by Pieces OS. With a simple HTTP or WebSocket request, developers can call Pieces OS to run large language models on various platforms, including macOS, Linux, and Windows.

Jordan also touched on the topic of starter projects, which will evolve from being just example projects to fully configured web environments. These environments will allow developers to immediately start interacting with the Pieces Copilot or save some code from somewhere and learn more about it.

We again emphasized the importance of community involvement in open source development. We encourage developers to join community meetings, follow updates, and build relationships within the community.

Finally, we expressed our excitement about the progress made so far, including the generation of the TypeScript SDK in just 24 hours and our plans to open source more SDKs in the near future. We encourage everyone to join the Discord, be active in the community, and start building cool stuff together.

Conclusion

We'd like to extend our heartfelt thanks to everyone who participated in our discussion about the open source initiatives at Pieces.

Our team at Pieces is committed to fostering a vibrant open source community and creating powerful tools that can assist developers worldwide.

We're particularly excited about the potential of our open source projects, including the newly announced TypeScript SDK, to enhance the functionality of Pieces and provide a platform for developers to contribute and learn. The ability to collaborate and build on these projects is a significant step forward, and we're thrilled to be part of this journey.

We're also eagerly anticipating the release of more SDKs and the growth of our open source community. The discussions and plans we've shared today are just the beginning, and we hope you share our enthusiasm. Keep an eye out for announcements about our next AMA; we're excited to delve deeper into some additional features in Pieces and expand our open source initiatives.

Whether you're a current user with feedback, a developer interested in contributing to our open source projects, or someone just curious about the technology, we invite you to join our next session and become part of our vibrant community on Discord!

Once again, thank you for your invaluable participation and for being a part of our community. Your feedback and engagement are what drive us to continue innovating. Until the next AMA, happy coding!