Privacy Policy

Last updated on November 6th, 2024

Mesh Intelligent Technologies, Inc.’s Pieces (“Pieces”, “we”) knows that you care about how your personal information is used and shared, and Pieces takes your privacy seriously. In this Privacy Policy, we describe how we collect, use, and handle your personal information when you use our services, software, technology, and websites (“Services”). This Privacy Policy applies when Pieces is the data controller with regard to the collected personal information referenced in this Privacy Policy. Pieces’s address is:

Mesh Intelligent Technologies, Inc., 1311 Vine St., Cincinnati, OH 45202

Types of Information We Collect

We collect and use the following information to provide, improve, protect, and promote our Services.

Account Information. We collect, and associate with your account, the information you provide to us when you do things such as create your account and upgrade to a Pieces account that includes paid features (“Paid Account”).

Your Content. Our Services are designed as a simple and personalized way for you to store your code, files, data, content, and other information (“Your Content”), collaborate with others, and work across multiple devices and services. To provide you with the Services, we store, process, and may transmit Your Content (if you choose to sync Your Content), as well as information related to it. This related information includes your profile information, file names, file sizes, uploaded times, what application a file came from, identity of collaborators, usage activity, and other information.

Usage Information. We collect information related to how you use and interact with the Services, including actions you take in your account, such as adding or viewing a Piece or searching for a Piece. We also may collect information about the pages you view, the referring site, your IP address and session information, and the date and time of each request. This is information we collect from every user of the Services, whether they have an account or not.

Device Information. We also collect information from and about the devices you use to access the Services. This includes things like IP addresses and the type of browser and device you use. Your devices (depending on their settings) may also transmit location information to the Services.

Cookies and Other Technologies. We may use technologies like cookies and pixel tags on our marketing website. Cookies may function to enable us to understand how you interact with our Services and improve them based on that information, compile statistical reports, and provide information for future development. By using our Services, you agree that we can place these types of cookies on your computer or device. You can set your browser to not accept cookies, but this may limit your ability to use the Services.

Do Not Track. Do Not Track is a privacy preference that users can set in their web browsers. When a user turns on the Do Not Track signal, the browser sends a message to websites requesting them not to track the user. At this time, we do not respond to Do Not Track browser settings or signals. For information about Do Not Track, please visit: www.allaboutdnt.com

How We Use Your Information

We may use your information for the following purposes:

To present our Services and their contents to you.

To provide you with information, products, or services that you request from us, and respond to support requests.

To provide you with notices about your Pieces account.

To carry out our obligations and enforce our rights arising from any contracts entered into between you and us, including for billing and collection.

To notify you about upgrades to the Services.

To notify you about changes to Pieces or any products or services we offer or provide.

For the purpose of aggregated statistical analysis of how you and others use our Services.

For developing and marketing our Services and other services.

For the management of our relationships and the administration of our Services and business.

For any other legal purpose with your consent.

As necessary for security purposes or to investigate possible fraud or attempts to harm Pieces or our users.

To comply with our legal obligations, protect our intellectual property, and enforce our Terms of Service.

To fulfill any other purpose for which you provide your information.

In any other way we may describe when you provide the information.

We may also use your personal information to contact you about our goods and services that we believe may be of interest to you. If you do not want us to use your information in this way, please send us an email at privacy@pieces.app.

Legal Bases for Processing your Personal Information

To the extent that our processing of your personal information is subject to international laws (including, but not limited to, the European Union's General Data Protection Regulation), Pieces is required to notify you about the legal basis on which we process personal information. Pieces processes your personal information on the following legal bases:

When you create a Pieces account, you provide us with certain personal information in order to enter into the Terms of Service agreement with us, and we process that information on the basis of performing that contract. If you have a Paid Account with us, we collect and process additional personal information on the basis of performing that contract.

We collect and process personal information to provide you with the Services and for our legitimate business needs and interests, including the management of our user relationships, the administration of our Services and business, and for legal compliance purposes, security purposes, or to maintain ongoing confidentiality, availability, and resilience of Pieces’s Services.

To the extent we process your personal information for other purposes, we ask for your consent in advance, which you may withdraw at any time by emailing us at privacy@pieces.app.

Sharing Your Personal Information

We may share personal information as discussed below:

Third Parties, Data Processors, and Agents. In some cases, Pieces uses third-party service providers as agents and/or data processors for the business purposes of helping us provide, improve, protect, and promote our Services. These third parties may access your personal information to perform tasks on our behalf. Examples include sending email, analyzing data, and providing user services. Personal information is collected and/or shared to the extent necessary to enable such processing. Unless we tell you otherwise, third parties, data processors, and agents do not have any right to use personal information we share with them beyond what is necessary to assist us.

Other Users. Our Services may display information like your name, profile picture, email address, and usage information to other users with whom you collaborate or choose to share.

Other Applications. You may choose to connect your Pieces account with certain third-party services, for example via Pieces APIs. This enables Pieces and those third parties to exchange information about you and data in your account so that Pieces and those third parties can provide, improve, protect, and promote their services. Please note that third parties’ use of your information will be governed by their own privacy policies and terms of service.

Legal Purposes, Protection, and the Public Interest. Pieces may release personal information if required in response to a valid subpoena, court order, search warrant, or similar government order, or when we believe in good faith that disclosure is necessary to comply with our legal obligations; to enforce or apply our Terms of Service and other agreements; or to protect the rights, property, safety, or security of Pieces, our employees, our users, third parties, or the public at large. This includes, without limitation, exchanging information with other companies and organizations for fraud protection.

Business Transfers. In some cases, Pieces may choose to buy or sell assets. In these types of transactions, personal information is typically one of the business assets that is transferred. If Pieces, or substantially all of its assets, were acquired, or in the event that Pieces goes out of business or enters bankruptcy, personal information would be one of the assets that is transferred or acquired by a third party. You acknowledge that such transfers may occur, and that any acquirer of Pieces may continue to use your personal information as set forth in this Privacy Policy.

Security of Personal Information

Security. Pieces endeavors to protect personal information. We have implemented reasonable measures designed to secure your personal information from accidental loss and from unauthorized access, use, alteration, and disclosure. We have a team that works to keep your information secure and tests for vulnerabilities. We may deploy automated technologies to detect abusive behavior and content that may harm our Services, you, or other users. Please note that the transmission of information via the internet is not completely secure, and Pieces cannot guarantee the security of personal information. Unauthorized entry or use, hardware or software failure, and other factors may compromise the security of personal information at any time. For additional information about the security measures Pieces uses in connection with our Services, please contact us at privacy@pieces.app.

User Controls. If your account requires a user password, you need to ensure that there is no unauthorized access to your account and personal information. To do so, select and protect your password appropriately, and limit access to your computer and browser by signing off after you have finished accessing your account.

Retention of Information

When you sign up for an account with us, we’ll retain information you store on our Services for as long as your account exists or as long as we need it to provide you with the Services. Collected personal information will be retained for no longer than is necessary to fulfill the purposes for which it was collected, or as required by applicable laws or regulations. Server log information and email communications are generally retained for no longer than a period of one year.

Transfer of Information

To provide you with the Services, your personal information may be transferred, stored, or processed on servers outside of your home country. Where this is the case, we transfer information on the basis of mechanisms approved under applicable laws, and will take steps to make sure the right security measures are taken so that your privacy rights continue to be protected as outlined in this Privacy Policy. By submitting your personal information, you’re agreeing to this transfer, storing, and/or processing.

Your Control and Access of Your Data

You have control over your personal data and how it’s collected, used, and shared. If collection of personal information is based on your consent, you may withdraw your consent at any time by emailing us at privacy@pieces.app.

You may update or correct certain personal information at any time by contacting us at privacy@pieces.app or, in some cases, updating the information from your account page. When you update information, however, we may maintain a copy of the unrevised information in our records.

You can also email us at privacy@pieces.app if you would like to ask us to remove certain information you think is inaccurate.

Additional Rights as a Data Subject

Please be aware that you have the following additional rights with regard to your personal information:

Access to Personal Information. You may request a copy of the personal information that we hold about you. If you would like a copy of some or all of your personal information, please email us at privacy@pieces.app or write to us at the following address: Mesh Intelligent Technologies, Inc., 1311 Vine St., Cincinnati, OH 45202

Deletion/Erasure of Personal Information. Should you wish for Pieces to delete your personal information, please email us at privacy@pieces.app or write to us at the above mailing address. Please be aware that information may be retained despite a request for deletion in certain situations, including where processing is required for Pieces’s compliance with a legal obligation or for Pieces’s establishment, exercise, or defense of legal claims.

Restrict/Suspend/Object to Processing of Personal Information. In certain circumstances, you have the right to restrict or suspend processing of your personal information, as well as the right to object to the processing of your personal information. You also have the right to object to the processing of your personal information for direct marketing purposes.

Complaining to an Authority. If you feel that your personal information has been processed in a way that does not meet the requirements of the law, you may lodge a complaint with a relevant supervisory or regulatory authority.

Data Portability. When processing of personal information is based on consent or contract, and carried out by automated means, you have the right to receive the personal information which you have provided to Pieces in a structured, commonly used, and machine-readable format, and have the right to transmit that information to another data controller without delay.

Advertising and Marketing Through Third-Party Platforms

We may use your contact information, such as email addresses provided through newsletter subscriptions or account registration, to advertise our services to you on third-party platforms like Meta, Google, and similar advertising networks. This may include using your information for targeted advertising (e.g., custom audiences) on these platforms to promote relevant services that may interest you. You have the right to opt out of this type of advertising by contacting us at privacy@pieces.app.

Changes to Our Privacy Policy

We may revise this Privacy Policy from time to time and for any reason, and will post the most current version on our website. If a revision meaningfully reduces your rights, we will notify you. Users are bound by any changes to the Privacy Policy when they access or use our Services after such changes have first been posted.

Alternative Access to Privacy Policy

If you are a user with a disability, or an individual assisting a user with a disability, and need alternative access to this Privacy Policy, please contact us at privacy@pieces.app.

Contact

Have questions or concerns about Pieces, our Services, your personal information, our use and disclosure practices, or your consent choices? Contact us at privacy@pieces.app. You can write to us at: Mesh Intelligent Technologies, Inc., 1311 Vine St., Cincinnati, OH 45202

You also have the right to contact your local data protection supervisory authority.

California Residents – CCPA Notice and Other California Privacy Rights

This section applies only to California residents. It describes how Pieces collects, uses, and shares personal information of California residents when we act as a “business” as defined under the California Consumer Privacy Act of 2018 (“CCPA”), as well as California residents’ rights with respect to their personal information. Any terms defined in the CCPA have the same meaning when used in this notice.

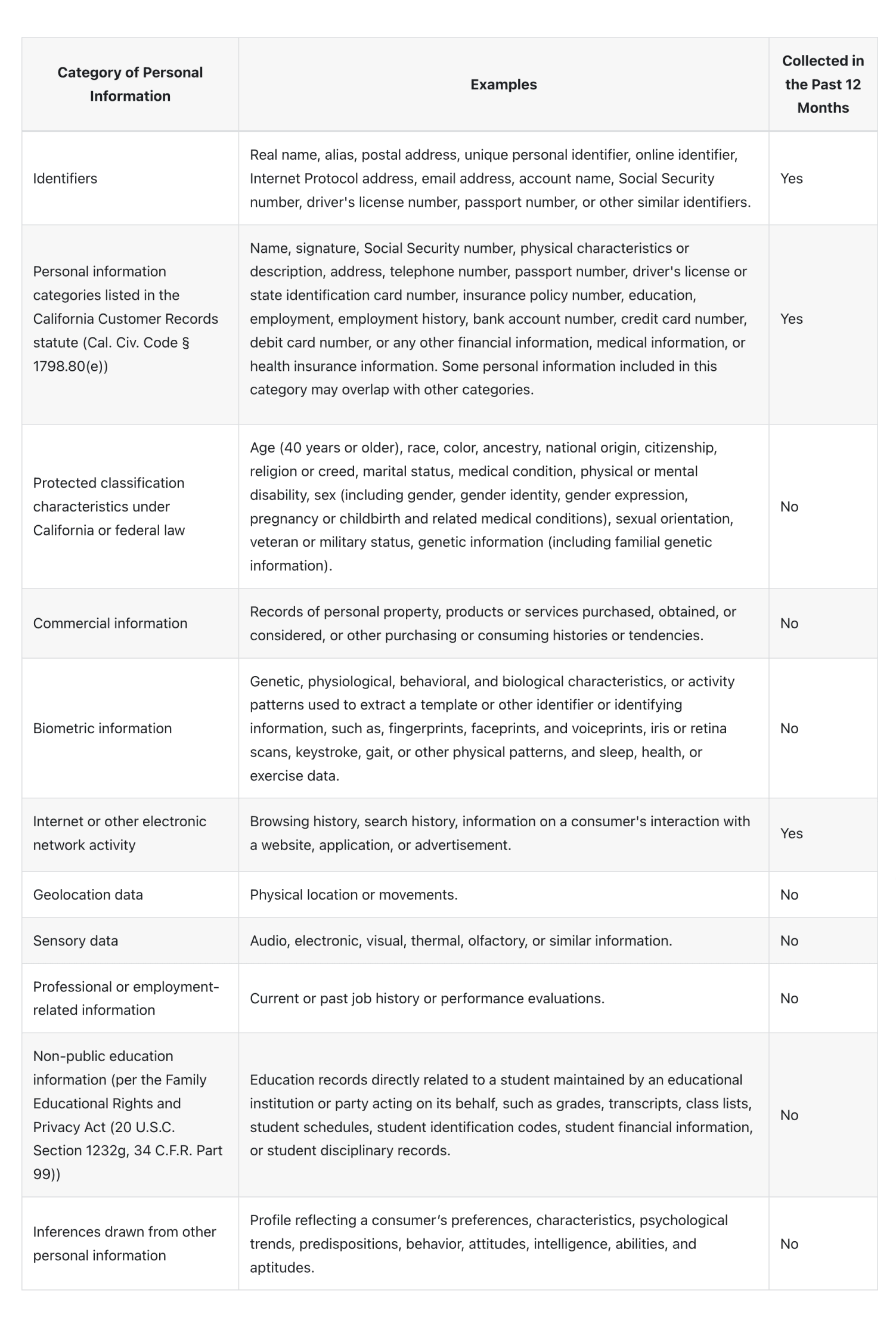

Personal Information We Collect

The chart below describes the personal information Pieces collects by reference to the categories specified in the CCPA, and describes our practices during the 12 months preceding the effective date of this Privacy Policy. Information you voluntarily provide to us, such as in free-form webforms, may contain other categories of personal information not described below. Each category is used for the purposes described in the How We Use Your Information section above and disclosed to the categories of third parties described in the Sharing Your Personal Information section above.

How We Obtain Personal Information

We obtain personal information directly and indirectly from activity on our websites and via your use of the Services.

Disclosures of Personal Information for a Business Purpose

In the preceding 12 months, Pieces has disclosed personal information for the following business purposes:

Performing services such as maintaining accounts, providing customer service, and fulfilling orders

In the preceding 12 months, the personal information disclosed by Pieces for a business purpose includes the following categories:

Identifiers

Personal information

Internet or other electronic network activity

We disclose your personal information for a business purpose to the following categories of third parties:

Service Providers

Sale of Personal Information

In the preceding 12 months, Pieces has not sold personal information.

Your California Rights

Right to Access Specific Information/Data Portability. You have the right to request that we disclose certain information to you about our collection and use of your personal information over the past 12 months. Once we receive and confirm your verifiable consumer request, we will disclose to you:

The categories of personal information Pieces has collected about you.

The categories of sources from which the personal information is collected.

The business or commercial purpose for collecting or selling your personal information.

The categories of third parties with whom we have shared your personal information.

The specific pieces of personal information we have collected about you.

If we sold or disclosed your personal information for a business purpose, two separate lists disclosing:

Sales, identifying the personal information categories that each category of recipient purchased

Disclosures for a business purpose, identifying the personal information categories that each category of recipient obtained.

Right to Deletion of Information. You have the right to request that we, and our service providers, delete the personal information we have collected from you. However, exceptions to this right to deletion include, but are not limited to, when the information is necessary for us or a third party to do any of the following:

Complete your transaction.

Provide you a good or service.

Perform a contract with you.

Detect or resolve security or functionality-related issues.

Safeguard the right to free speech.

Comply with the law or a legal obligation.

Carry out any actions for internal purposes that you might reasonably expect.

Make other internal and lawful uses of the information that are compatible with the context in which you provided it.

Right to Non-Discrimination. You have the right not to be discriminated against for exercising your CCPA rights. Unless permitted by law, we will not:

Deny you goods or services.

Charge you different prices or rates for goods or services, including through granting discounts or other benefits, or imposing penalties.

Provide you a different level or quality of goods or services.

Suggest that you may receive a different price or rate for goods or services or a different level or quality of goods or services.

Exercising Your California Access, Data Portability, and Privacy Rights

To exercise the access, data portability, and deletion rights described above, please submit a verifiable consumer request to us by emailing us at privacy@pieces.app or submitting our webform.

You may only make a verifiable consumer request for access or data portability twice within a 12-month period. Only you, or a person registered with the California Secretary of State authorized to act on your behalf, may make a verifiable consumer request related to your personal information.

The verifiable consumer request must:

Provide sufficient information that allows us to reasonably verify you are the person about whom we collected personal information or an authorized representative.

Describe your request with sufficient detail that allows us to properly understand, evaluate, and respond to it.

We cannot respond to your request or provide you with personal information if we cannot verify your identity or the authority to make the request and confirm that the personal information relates to you. We will only use personal information provided in a verifiable consumer request to verify the requestor’s identity or authority to make the request.

Making a verifiable consumer request does not require you to create an account with us. However, we do consider requests made through your password-protected account sufficiently verified when the request relates to personal information associated with that specific account.

Response Timing and Format

We aim to respond to a consumer request for access, portability, or deletion within 45 days of receiving that request. If we require more time, we will inform you of the reason and extension period in writing. Any disclosures we provide will only cover the 12-month period preceding receipt of the verifiable consumer request. The response we provide will also explain the reasons we cannot comply with a request, if applicable. For data portability requests, we will select a format to provide your personal information that is readily useable and should allow you to transmit the information from one entity to another entity without hindrance.

If you have an account with us, we will deliver our written response to that account. If you do not have an account with us, we will deliver our written response by mail or electronically, at your option.

We do not charge a fee to process or respond to your verifiable consumer request unless it is excessive, repetitive, or manifestly unfounded. If we determine that a request is manifestly unfounded or excessive, we may either:

Charge a reasonable fee reflecting administrative costs involved; or

Refuse to respond to the request and notify you of the reason.

Nevada Residents

If you are a resident of Nevada, you have the right to opt out of the sale of certain personal information to persons who license or sell your personal information. Pieces does not currently sell Nevada residents’ personal information. Should we do so, we will update this section of the Privacy Policy. To exercise your opt-out right, contact us at privacy@pieces.app with the subject line "Nevada Do Not Sell Request" and include your name and the email address associated with your account.