Nano-Models: a recent breakthrough as the Pieces team brings LTM‑2.5 to life 🎉

Discover the latest breakthrough in AI with Nano-Models as the Pieces Team unveils LTM‑2.5. A game-changer for AI-powered development!

In the pursuit of building long-term Artificial Memory at the OS level, understanding when a user wants to retrieve information is just as crucial as understanding what they want to retrieve.

In the early days, every step of that retrieval pipeline, from intent classification through span extraction, normalization, enrichment, relevance scoring, formatting, and upload, ran through cloud-hosted LLMs.

That meant 8–11 preprocessing tasks before touching the memory store, another 2–4 post-processing tasks afterward, and finally a round-trip to a remote model to compose the answer.

The result?

Cumulative latency that drags time-to-first-token into the seconds, accuracy hurdles at each stage, user data exposed in transit, and token bills that balloon with every query.

Our breakthrough with LTM-2.5 is a pair of purpose-built, on-device nano-models that offload temporal understanding entirely to local hardware: one interprets the user's temporal intent, and the other extracts the precise time span(s) implied by their language.

These specialized models are the result of extensive knowledge distillation from larger foundation models, quantized and pruned to run efficiently on consumer hardware.
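The post doesn't spell out the distillation recipe. For readers new to the idea, here is a minimal sketch of the standard soft-target objective for a classifier: the student learns to match the teacher's temperature-softened output distribution, blended with ordinary cross-entropy on the true label. The function names and the `temperature` and `alpha` parameters are illustrative assumptions, not Pieces' training code.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    hard_label: int,
    temperature: float = 2.0,
    alpha: float = 0.5,
) -> float:
    """Soft-target distillation loss (hypothetical helper):
    alpha * T^2 * KL(teacher || student), both softened at temperature T,
    plus (1 - alpha) * cross-entropy of the student against the hard label."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    ce = -math.log(softmax(student_logits)[hard_label])
    return alpha * temperature**2 * kl + (1 - alpha) * ce
```

Raising `temperature` exposes more of the teacher's relative preferences among the wrong classes; the `temperature**2` factor keeps the soft-target gradients on a comparable scale as the temperature changes.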

Now the entire 10–15 step pipeline runs on-device, preserving privacy, slashing costs, and cutting the latency of deterministic long-term context retrieval from seconds to milliseconds.

When to leverage the temporal model

Our pipeline depends on two critical steps: first determining the query's intent, then generating one or more time ranges that represent the user's natural-language query. The intent classifier distinguishes the following cases:

| Use Case | Description |
|---|---|
| Content Retrieval | Fetching past events ("What was I working on just now?") |
| Action / Scheduling | Setting reminders or appointments ("Remind me in two hours") |
| Future Information / Planning | Forecasting or "next week" inquiries ("What am I doing tomorrow afternoon?") |
| Current Status | Real-time checks ("What am I doing right now?") |
| Temporal – General | Ambiguous or loosely specified time references ("Show me last week around Friday evening") |
| Non-Temporal | Queries without a time component ("Explain the concept of recursion.") |
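A minimal sketch of the two-step cascade described above, using the class labels from the table. The `classify` and `predict_span` callables are hypothetical stand-ins for the two nano-models, whose real interfaces aren't published. Which intents trigger the span predictor (`NEEDS_SPAN`) is also an assumption: in the examples later in this post only Content Retrieval queries receive a span, and Current Status is included here as a guess.

```python
from enum import Enum
from typing import Callable, Optional

Span = tuple[str, str]  # (start, end) as ISO-8601 strings


class TemporalIntent(Enum):
    CONTENT_RETRIEVAL = "Content Retrieval"
    ACTION_SCHEDULING = "Action / Scheduling"
    FUTURE_PLANNING = "Future Information / Planning"
    CURRENT_STATUS = "Current Status"
    TEMPORAL_GENERAL = "Temporal – General"
    NON_TEMPORAL = "Non-Temporal"


# Assumption: only intents that look back over the activity timeline
# (or at "right now") need a time span; everything else skips stage two.
NEEDS_SPAN = {TemporalIntent.CONTENT_RETRIEVAL, TemporalIntent.CURRENT_STATUS}


def route(
    query: str,
    classify: Callable[[str], TemporalIntent],
    predict_span: Callable[[str], Span],
) -> Optional[Span]:
    """Run the cheap intent classifier first; call the span predictor only
    when the intent actually requires a timeline lookup."""
    intent = classify(query)
    if intent in NEEDS_SPAN:
        return predict_span(query)
    return None
```

Gating the span predictor on intent is what keeps a query like "I will go to the store tomorrow." from producing a spurious past range.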

Temporal range generation

Once we've determined that a query requires temporal memory access, we need to precisely identify when to search in the user's activity timeline.

This is where our second nano-model, the temporal span predictor, comes into play.

Range types and boundaries

The temporal span predictor handles several distinct types of time references:

| Range Type | Example Query | Generated Span | Search Strategy |
|---|---|---|---|
| Point-in-time | "Show me what I was doing at 2pm yesterday" | Single timestamp with narrow context window | Precise timestamp lookup with small buffer |
| Explicit period | "What emails did I receive between Monday and Wednesday?" | Clearly defined start/end boundaries | Bounded range search with exact limits |
| Implicit period | "What was I working on last week?" | Inferred start/end based on cultural/contextual norms | Automatically expanded to appropriate calendar boundaries |
| Relative recent | "What was I just doing?" | Short window counting backward from current time | Recency-prioritized retrieval with adaptive timespan |
| Fuzzy historical | "Show me that article I read about quantum computing last summer" | Broad date range with lower confidence boundaries | Expanded search space with relevance decay at boundaries |
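To make the "implicit period" and "relative recent" rows concrete, here is a rule-based sketch of the windows they describe. The span predictor learns these boundaries rather than hard-coding them; the helper names, the Monday week start (many locales start weeks on Sunday), and the 6-minute recency default (loosely mirroring the "just" examples later in this post) are all assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def last_week_bounds(now: datetime) -> tuple[datetime, datetime]:
    """Implicit period: expand 'last week' to full calendar-week boundaries.

    Assumes weeks run Monday 00:00 to the following Monday 00:00 (end exclusive)
    in whatever timezone `now` carries."""
    start_of_this_week = (now - timedelta(days=now.weekday())).replace(
        hour=0, minute=0, second=0, microsecond=0
    )
    return start_of_this_week - timedelta(weeks=1), start_of_this_week


def recent_window(now: datetime, minutes: float = 6.0) -> tuple[datetime, datetime]:
    """Relative recent: a short window counting backward from the current time.
    The default width is a placeholder, not the model's learned value."""
    return now - timedelta(minutes=minutes), now


now = datetime(2025, 4, 17, 16, 43, 2, tzinfo=timezone.utc)  # a Thursday
print(last_week_bounds(now))  # Monday 2025-04-07 00:00 to Monday 2025-04-14 00:00 UTC
```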

Optimizing the temporal search space

The model doesn't just identify time boundaries; it also generates crucial metadata about search strategy:

Confidence scores for timespan boundaries (enabling better retrieval when dates are ambiguous)

Periodicity hints for recurring events (distinguishing "my Monday meeting" from "last Monday's meeting")

Time-zone awareness for properly interpreting references when users travel

Contextual weighting that prioritizes activity density over raw timestamps (e.g., for "when I was working on the Smith project")
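This metadata could travel with each span as a small record. The sketch below is hypothetical: the `TemporalSpan` field names and the linear padding and decay rule are our assumptions, not the model's actual output format. It shows one way boundary confidence could widen the search window and down-weight hits near uncertain edges, as in the "fuzzy historical" row; the periodicity and time-zone fields are simply carried along.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class TemporalSpan:
    """Hypothetical container for a predicted span plus search metadata."""
    start: datetime
    end: datetime
    start_confidence: float = 1.0   # 0..1, certainty about `start`
    end_confidence: float = 1.0     # 0..1, certainty about `end`
    periodic: bool = False          # e.g. "my Monday meeting" (recurring)
    tz_name: Optional[str] = None   # IANA zone the user spoke in, if known

    def search_window(self, max_pad: timedelta) -> tuple[datetime, datetime]:
        """Pad each boundary in proportion to its uncertainty."""
        return (
            self.start - max_pad * (1.0 - self.start_confidence),
            self.end + max_pad * (1.0 - self.end_confidence),
        )

    def boundary_weight(self, t: datetime, max_pad: timedelta) -> float:
        """1.0 inside the core span, decaying linearly to 0 across the padding."""
        if self.start <= t <= self.end:
            return 1.0
        lo, hi = self.search_window(max_pad)
        if t < self.start:
            pad = self.start - lo
            return max(0.0, 1.0 - (self.start - t) / pad) if pad else 0.0
        pad = hi - self.end
        return max(0.0, 1.0 - (t - self.end) / pad) if pad else 0.0
```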

This specialized temporal range extraction eliminates the need to scan the entire memory corpus for each query, dramatically reducing both computational load and latency while improving retrieval precision.
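The post doesn't describe how the memory store is indexed. As a simple illustration of why a span avoids a full scan, assume events are kept in a list sorted by timestamp (a hypothetical layout): two binary searches with Python's standard `bisect` module locate the window in O(log n), and only the k events inside it are read.

```python
from bisect import bisect_left, bisect_right
from datetime import datetime, timezone


def events_in_range(
    timestamps: list[datetime],
    events: list[str],
    start: datetime,
    end: datetime,
) -> list[str]:
    """Return the events whose timestamp lies in the closed interval [start, end].

    Assumes `timestamps` is sorted ascending and `events[i]` belongs to `timestamps[i]`."""
    lo = bisect_left(timestamps, start)  # first index with ts >= start
    hi = bisect_right(timestamps, end)   # first index with ts > end
    return events[lo:hi]


utc = timezone.utc
ts = [datetime(2025, 4, 17, 16, m, tzinfo=utc) for m in (10, 38, 41, 50)]
ev = ["email", "editor", "browser", "call"]
print(events_in_range(ts, ev, datetime(2025, 4, 17, 16, 37, tzinfo=utc), datetime(2025, 4, 17, 16, 43, tzinfo=utc)))
# prints ['editor', 'browser']
```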

Intention differentiation & edge cases

We need to route queries correctly between retrieval and planning:

Retrieval vs. planning

"What was I working on just now?" → Content Retrieval

"What am I doing tomorrow afternoon?" → Future Information / Planning

Broad vs. specific

"Show me last week around Friday evening" → Content Retrieval with a loose span

"Plan my weekend for next Friday evening" → Future Information / Planning

Temporal vs. non-temporal

"What was the website I was just looking at?" → Content Retrieval

"Explain the concept of recursion." → Non-Temporal (no memory lookup)

By clearly distinguishing temporal retrieval (pulling historical context) from temporal reference (scheduling or future-oriented intent), our on-device pipeline avoids misrouted cloud calls, cuts latency to the millisecond level, and maintains top-tier accuracy without sacrificing privacy or incurring hidden costs.

Examples & scenarios

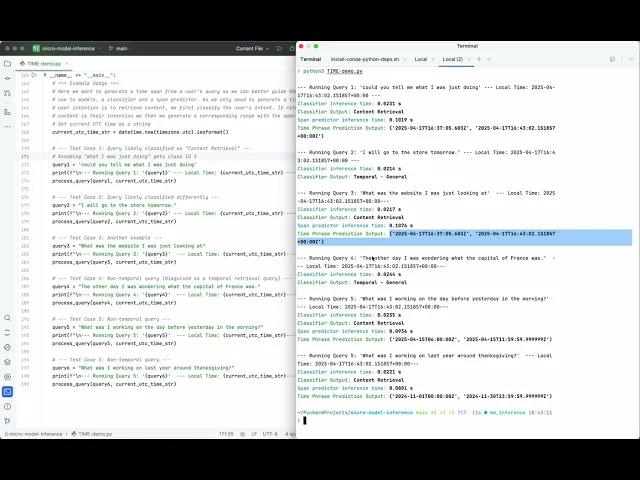

Below are representative user queries, each fed into our pipeline along with the user's current time in UTC (e.g., 2025-04-17T16:43:02.151857+00:00):

Recent Activity Retrieval

Query: "Could you tell me what I was just doing?"

Classifier (23 ms): Content Retrieval

Span Predictor (102 ms):

2025-04-17T16:37:05.603Z – 2025-04-17T16:43:02.151857Z

Showcases: precise on-device extraction of the last few minutes of activity

Future Planning (Nuanced Task)

Query: "I will go to the store tomorrow."

Classifier (21 ms): Temporal – General

Span Predictor: N/A

Showcases: correctly declining to generate a past time range for a future intention, an essential nuance

"Just" Retrieval Consistency

Query: "What was the website I was just looking at?"

Classifier (22 ms): Content Retrieval

Span Predictor (108 ms):

2025-04-17T16:37:05.603Z – 2025-04-17T16:43:02.151857Z

Showcases: consistent span output across semantically similar "just" queries

Long-Range Historical Query

Query: "What was I working on last year around Thanksgiving?"

Classifier (22 ms): Content Retrieval

Span Predictor (88 ms):

2024-11-01T00:00:00Z – 2024-11-30T23:59:59.999999Z

Showcases: broad date-range generation for loosely specified historical periods
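The spans above are printed as `start – end` in ISO 8601. A small helper (`parse_span`, ours, for illustration only) parses them with Python's standard `datetime.fromisoformat` and shows the window sizes: about six minutes for the "just" queries and the whole of November 2024 for the Thanksgiving query.

```python
from datetime import datetime


def parse_span(span: str) -> tuple[datetime, datetime]:
    """Split a 'start – end' string (en dash separator) into aware datetimes.

    A trailing 'Z' is rewritten as '+00:00' because datetime.fromisoformat
    only accepts 'Z' from Python 3.11 onward."""
    start_s, end_s = (part.strip() for part in span.split("–"))
    return (
        datetime.fromisoformat(start_s.replace("Z", "+00:00")),
        datetime.fromisoformat(end_s.replace("Z", "+00:00")),
    )


recent = parse_span("2025-04-17T16:37:05.603Z – 2025-04-17T16:43:02.151857Z")
thanksgiving = parse_span("2024-11-01T00:00:00Z – 2024-11-30T23:59:59.999999Z")

print(recent[1] - recent[0])              # 0:05:56.548857
print(thanksgiving[1] - thanksgiving[0])  # 29 days, 23:59:59.999999
```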

Benchmarks

Tested on an Apple M1 Max (32 GB) under heavy load (30+ tabs, video, IDEs, messaging) to simulate real-world conditions:

Classification results

This table compares how well each model identifies the correct temporal intent label for a given sample.

| Model Name | Accuracy | F1 (W) | Prec (W) | Recall (W) | Samples/Sec |
|---|---|---|---|---|---|
| nano-temporal-intent (TIME Intent) | 0.9930 | 0.9930 | 0.9931 | 0.9930 | 544.41 |
| gemini-1.5-flash-002 | 0.8241 | 0.8384 | 0.8834 | 0.8241 | 9.14 |
| gpt-4o | 0.8634 | 0.8470 | 0.8698 | 0.8634 | 9.40 |
| meta-llama/Llama-3.2-3B-Instruct | 0.4604 | 0.4094 | 0.4080 | 0.4604 | 92.43 |

Legend: Classification Models

nano-temporal-intent (TIME Intent): Our on-device nano-model for intent classification—ultra-lightweight and lightning-fast inference.

gemini-1.5-flash-002: Google's mid-tier large language model via API; good accuracy but higher latency and cost.

gpt-4o: OpenAI's flagship multimodal LLM; strong performance at premium compute and pricing.

meta-llama/Llama-3.2-3B-Instruct: A 3-billion-parameter open-weights LLM; lower accuracy, but faster than the cloud LLMs.

Legend: Classification Metrics

Accuracy: Proportion of samples for which the top prediction matches the true class.

F1 (W), Prec (W), Recall (W): F1-score, precision, and recall averaged across all intent classes, with each class weighted by its number of true samples (its support) to account for class imbalance.

Samples/Sec: Number of samples the model can process per second (higher is better).
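For reference, here is a plain-Python sketch of the support-weighted metrics, following the same definition as scikit-learn's `precision_recall_fscore_support(..., average="weighted")`; the helper name `weighted_prf` is ours. It also explains why the Accuracy and Recall (W) columns are identical in every row: weighting each class's recall by its share of samples, Σ_c (n_c/N)(TP_c/n_c), collapses to Σ_c TP_c / N, which is exactly accuracy.

```python
from collections import Counter


def weighted_prf(y_true: list[str], y_pred: list[str]) -> dict[str, float]:
    """Support-weighted precision, recall and F1, plus plain accuracy."""
    support = Counter(y_true)  # true samples per class
    n = len(y_true)
    prec_w = rec_w = f1_w = 0.0
    for label, count in support.items():
        tp = sum(t == p == label for t, p in zip(y_true, y_pred))
        pred_count = sum(p == label for p in y_pred)
        precision = tp / pred_count if pred_count else 0.0
        recall = tp / count
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        weight = count / n  # class share of the true labels
        prec_w += weight * precision
        rec_w += weight * recall
        f1_w += weight * f1
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    return {"accuracy": accuracy, "precision_w": prec_w, "recall_w": rec_w, "f1_w": f1_w}
```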

Span prediction results

This table measures how precisely each model extracts the correct time-span from text.

| Model Name | E.C.O. Rate | Avg IoU | Exact Match | Samples/Sec |
|---|---|---|---|---|
| nano-temporal-span-pred (TIME Range) | 0.9450 | 0.9201 | 0.8659 | 785.39 |
| gemini-1.5-pro-002 | 0.2065 | 0.1865 | 0.1684 | 9.35 |
| gpt-4o | 0.1767 | 0.1611 | 0.1535 | 9.47 |
| meta-llama/Llama-3.2-3B-Instruct | 0.1725 | 0.1640 | 0.1517 | 62.02 |

Legend: Span Models

nano-temporal-span-pred (TIME Range): On-device span extractor optimized for low latency and high IoU.

gemini-1.5-pro-002, gpt-4o, meta-llama/Llama-3.2-3B-Instruct: LLMs & SLMs performing span extraction via API calls.

Legend: Span Metrics

E.C.O. Rate (Exact Coverage Overlap): Fraction of predicted spans that exactly match the gold span boundaries.

Avg IoU (Intersection-over-Union): Average overlap ratio between predicted and true spans.

Exact Match: Strict fraction of samples where the predicted span text equals the ground truth.

Samples/Sec: Span-prediction throughput on the benchmark hardware (higher is better).
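Avg IoU and exact match are straightforward to compute for time intervals. The sketch below is our own illustration (`span_iou` and `span_scores` are hypothetical names): it treats spans as (start, end) datetimes and approximates the text-based Exact Match by boundary equality. E.C.O. Rate is left out because its computation isn't specified beyond the legend.

```python
from datetime import datetime, timezone

Span = tuple[datetime, datetime]


def span_iou(pred: Span, gold: Span) -> float:
    """Intersection-over-union of two closed time intervals, in seconds."""
    inter = (min(pred[1], gold[1]) - max(pred[0], gold[0])).total_seconds()
    if inter <= 0:
        # Disjoint (or merely touching) intervals share no duration; identical
        # zero-length spans still count as a perfect match.
        return 1.0 if pred == gold else 0.0
    # When the intervals overlap, their union is simply the covering hull.
    union = (max(pred[1], gold[1]) - min(pred[0], gold[0])).total_seconds()
    return inter / union


def span_scores(preds: list[Span], golds: list[Span]) -> tuple[float, float]:
    """Return (average IoU, exact-boundary-match rate) over paired spans."""
    ious = [span_iou(p, g) for p, g in zip(preds, golds)]
    exact = [p == g for p, g in zip(preds, golds)]
    return sum(ious) / len(ious), sum(exact) / len(exact)
```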

We observed that SLMs running in the cloud on an H100 GPU with vLLM incurred $0.018–$1.90 per run and took 15–25 minutes of compute time. By contrast, our cascade delivers structured time spans offline in milliseconds, with zero API cost and full data privacy.

Why it matters

🏗️ Architectural Specialization

Breaking monolithic LLMs into nano-models for classification vs. span prediction yields massive gains in both accuracy and speed.

🌐 Edge-First AI

Offline inference keeps sensitive data on-device, which is critical for medical, defense, and privacy-focused applications.

💡 Energy & Cost Efficiency

Eliminate token fees and slash compute budgets. This is the future of sustainable, scaled AI on laptops, wearables, and IoT.

🔬 Research Frontiers

Task-specific distillation, quantization, and final pruning for modular pipelines

Adaptive orchestration: dynamic model selection based on compute availability

Hardware/software co-design for ultra-efficient inference
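The post doesn't describe its quantization or pruning recipe. As a rough illustration only, here are the textbook versions of both on a plain list of weights: symmetric per-tensor int8 quantization (scale = max|w| / 127) and unstructured magnitude pruning. The helper names are hypothetical; production pipelines would use a framework's tooling instead.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: q = round(w / scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0  # avoid division by zero
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned
```

Each dequantized weight lands within half a quantization step (scale / 2) of its original value, which is the error budget the int8 format trades for a 4x smaller footprint than float32.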

Conclusion

This nano-temporal pipeline is part of a family of approximately 11 nano-models we're weaving into LTM-2.5 to make long-term memory formation and retrieval across your entire OS blazingly fast, highly accurate, and privacy-first.

Innovation isn't about bigger models; it's about smarter, specialized models that deliver tangible benefits in real-world applications.

By focusing on modular, purpose-built AI systems that run entirely on-device, we're redefining what's possible for intelligent, responsive computing that respects user privacy while dramatically reducing cost and latency.

We can't wait to share more as we push the boundaries of on-device AI in the world of OS-level Long-Term Memory.

Lastly, I would be remiss if I didn't mention the obvious: none of this would be possible without the incredible creativity, dedication, and perseverance from the team behind Pieces.

I’ll close with a special shout-out to our ML team and an extra special shout-out to Antreas Antoniou and Sam Jones for believing in the approach and turning these first-principles theories into breakthroughs ✨