Most people don’t think about tokens, and honestly, should they?

The way we use AI today has real implications for energy, cost, and the future of software. Here's why local-first AI matters.

Most people using AI tools today don’t think about tokens, and truthfully, there’s no reason they should. The idea that each word, each suggestion, each autocomplete is composed of tokens (fragments of language processed by a large model) is an abstraction that belongs more to machine learning research papers and engineering diagrams than to the lived experience of most users.

And yet, those tokens form the invisible infrastructure of how modern work increasingly happens. They are the units of AI’s power, the pieces of our productivity, and, perhaps most surprisingly, tiny carriers of energy and cost.

The experience of using AI has become so smooth, so embedded, and so frictionless that it’s easy to miss what’s really going on beneath the surface.

When you open a chatbot, paste in a list of notes, or ask it to rewrite your documentation, it doesn’t feel like you’re drawing power from a GPU in a distant data center. It feels like the machine is thinking. But in reality, those requests, whether simple or complex, rely on massive computational infrastructure that comes at an environmental and financial cost.

And while that cost may not seem significant at the level of an individual user, it scales in ways we’re only beginning to understand.

A moment worth pausing for

In this moment of AI ubiquity, it’s important to pause and ask ourselves what kind of infrastructure we are building, what kind of software we are normalizing, and what kind of practices we are reinforcing at scale. Although it may not be obvious at first, the energy implications of AI tooling, especially in the cloud, are real, measurable, and growing.

According to the International Energy Agency, the global average carbon intensity of electricity generation is around 400 grams of CO₂ per kilowatt-hour. A developer who relies on cloud-hosted AI models for even modest daily tasks may indirectly consume upwards of 3.65 kWh per year (about 10 Wh per day) through inference alone.

At that intensity, this equates to more than 1.4 kilograms of CO₂ annually per developer (3.65 kWh × 400 g/kWh ≈ 1,460 g). While these numbers might not sound dramatic on their own, when multiplied across thousands or even millions of developers, the impact is difficult to ignore. The shift from traditional software to AI-assisted work doesn’t just change how tasks get done. It changes the physical energy footprint of every workflow.
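The per-developer figure is simple arithmetic: annual energy multiplied by grid carbon intensity. The short Python sketch below reproduces it from the two numbers cited above. The one-million-developer fleet size is a hypothetical value chosen only to illustrate how the figure scales, not a measured population.

```python
# Worked arithmetic for the per-developer CO2 estimate.
# GRID_INTENSITY and ANNUAL_INFERENCE_KWH are the article's cited figures;
# DEVELOPERS is a hypothetical fleet size used only to show scaling.

GRID_INTENSITY_G_PER_KWH = 400   # approximate IEA global average
ANNUAL_INFERENCE_KWH = 3.65      # per-developer inference estimate (10 Wh/day)


def annual_co2_kg(kwh_per_year: float, grams_per_kwh: float) -> float:
    """Convert annual electricity use (kWh) into kilograms of CO2."""
    return kwh_per_year * grams_per_kwh / 1000.0


per_dev_kg = annual_co2_kg(ANNUAL_INFERENCE_KWH, GRID_INTENSITY_G_PER_KWH)

DEVELOPERS = 1_000_000  # hypothetical
fleet_tonnes = per_dev_kg * DEVELOPERS / 1000.0  # kg -> metric tonnes

print(f"Per developer: {per_dev_kg:.2f} kg CO2/year")
print(f"{DEVELOPERS:,} developers: {fleet_tonnes:,.0f} t CO2/year")
```

At 400 g/kWh, 3.65 kWh works out to 1.46 kg per developer, and the hypothetical fleet of one million developers would account for about 1,460 tonnes of CO₂ per year. Substituting a regional grid intensity is a one-line change.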

The infrastructure we don’t see

Much of this energy usage is invisible. It doesn’t show up in battery life or browser performance.

Instead, it’s incurred off-device, in massive server farms that most users never see.

Figure: The amount of compute used to train deep learning models increased 300,000× in six years. Source: Schwartz et al., Green AI.

And therein lies the challenge: when cost and emissions are abstracted away, it becomes difficult to make informed choices about the tools we use and how we use them.

This is not a call to abandon AI, nor to return to simpler times, but rather an invitation to reimagine how intelligence gets delivered: more aware, more efficient, and ultimately more empowering.

How local-first began as a performance mission

At Pieces, our work on local-first AI began not as an environmental initiative, but as a practical response to common frustrations we heard from developers: concerns about latency, reliability, privacy, and cloud dependence.

Our CEO, Tsavo Knott, has been focused on developer productivity since he began coding at age 14, and that focus ultimately shaped the foundation of Pieces itself. In many ways, Pieces began as exactly what its name suggests: pieces of code, carefully saved, reused, and repurposed to help developers move faster and think less about repetition.

As we built the core capabilities of our tool, we made a series of architectural decisions centered on autonomy and speed: running inference directly on the device, avoiding unnecessary cloud calls, and investing in smaller, faster, task-specific models that could operate efficiently in low-resource environments. We call these nano models.

Over time, it became clear that these decisions had another, deeper benefit. They weren’t just making our tools more responsive or more secure; they were making them more sustainable. A nano model that runs on-device in a few milliseconds doesn’t just save API calls; it saves electricity.

It removes the need for energy-intensive GPUs to spin up in remote data centers. It avoids the data transfer and carbon overhead of every prompt and completion. In short, it lightens the load, both technically and environmentally.

Building for efficiency

What we’ve learned is that thoughtful model design, when paired with the right infrastructure, can change the economics and energy profile of AI-assisted work.

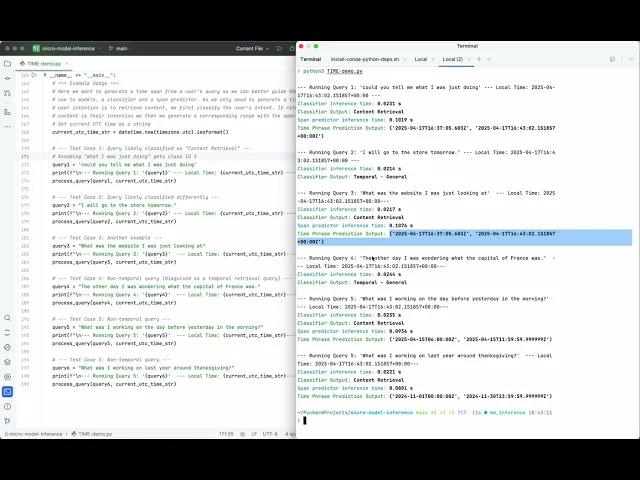

Instead of calling a 70-billion-parameter cloud model for every task, we began routing simpler, repetitive actions through models with fewer than 100 million parameters.

These nano models, trained and fine-tuned to handle very specific responsibilities such as tagging content, linking context, or classifying user intent, operate at a fraction of the energy cost.
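The routing idea can be sketched as a small dispatcher. This is a minimal illustration under stated assumptions, not Pieces' implementation: the task names, the `needs_deep_reasoning` flag, and the two target labels are hypothetical stand-ins for whatever signals a real router would use.

```python
from dataclasses import dataclass

# Hypothetical narrow tasks that a sub-100M-parameter local model could handle.
# Illustrative names only; this is not Pieces' actual task taxonomy.
NANO_TASKS = {"tag_content", "link_context", "classify_intent"}


@dataclass(frozen=True)
class Route:
    target: str  # "local-nano" or "cloud-llm" (hypothetical labels)
    reason: str


def route_task(task_type: str, needs_deep_reasoning: bool = False) -> Route:
    """Keep narrow, repetitive work on-device; escalate everything else."""
    if task_type in NANO_TASKS and not needs_deep_reasoning:
        return Route("local-nano", f"{task_type} is narrow and well defined")
    return Route("cloud-llm", f"{task_type} needs broader context or reasoning")


for task in ["tag_content", "classify_intent", "draft_design_doc"]:
    print(f"{task:>16} -> {route_task(task).target}")
```

The design choice here is to make the local path the default for a short, explicit allowlist of tasks and to treat the large model as the escalation path, so heavier computation is used intentionally rather than automatically.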

Internal benchmarks, supported by studies such as those from MIT SuperCloud and Green AI, show energy consumption ranging from about 1 millijoule per token (nano models) to 3 to 4 joules per token (cloud LLMs). That’s a gap of roughly 3,000 to 4,000×, more than three orders of magnitude. Users never need to notice it in terms of functionality, but it matters immensely in aggregate.
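To see what those per-token figures mean for a single user, the sketch below converts joules per token into kilowatt-hours per year. The per-token energy values are the ones quoted above; the workload of 10,000 tokens per day is a hypothetical assumption chosen for illustration.

```python
import math

# Per-token energy figures quoted in the text.
NANO_J_PER_TOKEN = 1e-3            # ~1 millijoule per token
CLOUD_J_PER_TOKEN = (3.0, 4.0)     # 3 to 4 joules per token
J_PER_KWH = 3.6e6                  # 1 kWh = 3.6 million joules

TOKENS_PER_DAY = 10_000            # hypothetical daily workload


def energy_kwh(tokens: int, joules_per_token: float) -> float:
    """Total energy in kWh for a given number of tokens."""
    return tokens * joules_per_token / J_PER_KWH


yearly_tokens = TOKENS_PER_DAY * 365
for label, jpt in [("nano", NANO_J_PER_TOKEN),
                   ("cloud (low)", CLOUD_J_PER_TOKEN[0]),
                   ("cloud (high)", CLOUD_J_PER_TOKEN[1])]:
    print(f"{label:>12}: {energy_kwh(yearly_tokens, jpt):.4f} kWh/year")

# How many orders of magnitude separate the two ends of the range.
for cloud_jpt in CLOUD_J_PER_TOKEN:
    ratio = cloud_jpt / NANO_J_PER_TOKEN
    print(f"ratio {ratio:,.0f}x = 10^{math.log10(ratio):.2f}")
```

Under that assumption the cloud range comes to roughly 3.0 to 4.1 kWh per year, in the same neighborhood as the 3.65 kWh per-developer estimate earlier in the article, while the nano path uses about 1 Wh. A 3,000 to 4,000× ratio corresponds to about 3.5 to 3.6 orders of magnitude.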

What context-aware AI can do (without the cloud)

This approach is also closely tied to the expansion of our intelligent context systems, particularly Pieces MCP, which orchestrates how information is processed and surfaced across your workspace.

By leaning on local inference chains built from cooperating nano and small language models, MCP allows us to deliver high-context AI assistance without repeatedly reaching into the cloud.

This not only improves performance and privacy but also dramatically reduces energy overhead, since even long-form understanding and cross-note reasoning can now happen locally.
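A local inference chain can be pictured as a sequence of small stages that each enrich a shared context, with no network call between them. In the toy sketch below, simple keyword heuristics stand in for the nano models. The stage names (`classify_intent`, `tag`, `link_context`) and the context dictionary are hypothetical and do not describe Pieces MCP's actual interfaces.

```python
from typing import Callable

# A stage takes the shared context dict and returns it enriched.
Stage = Callable[[dict], dict]


def classify_intent(ctx: dict) -> dict:
    # Hypothetical stand-in for a nano intent classifier (keyword heuristic).
    q = ctx["query"].lower()
    ctx["intent"] = "search" if ("where" in q or "find" in q) else "summarize"
    return ctx


def tag(ctx: dict) -> dict:
    # Hypothetical stand-in for a nano tagger: keep longer words, minus punctuation.
    words = (w.strip("?.,!:;") for w in ctx["query"].lower().split())
    ctx["tags"] = sorted({w for w in words if len(w) > 5})
    return ctx


def link_context(ctx: dict) -> dict:
    # Hypothetical stand-in for a context linker: local notes mentioning any tag.
    ctx["linked"] = [n for n in ctx["notes"]
                     if any(t in n.lower() for t in ctx["tags"])]
    return ctx


def run_chain(stages: list[Stage], ctx: dict) -> dict:
    # Every stage runs in-process; nothing here touches the network.
    for stage in stages:
        ctx = stage(ctx)
    return ctx


result = run_chain(
    [classify_intent, tag, link_context],
    {
        "query": "Where did I save the websocket reconnect snippet?",
        "notes": ["Websocket reconnect with backoff", "Grocery list",
                  "Snippet: retry decorator"],
    },
)
print(result["intent"], result["tags"], result["linked"])
```

Because each stage only reads and writes the shared context, any stage could be swapped for a real local model, or for a cloud call when a task genuinely needs one, without changing the rest of the chain.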

A balanced view of intelligence and infrastructure

This kind of architectural shift is not just about optimization. It’s about reclaiming agency over how AI is used in the tools we build and the tools we depend on. It’s about challenging the assumption that intelligence must be centralized, abstracted, and energy-intensive.

And it’s about recognizing that small changes in infrastructure can lead to meaningful outcomes, not only for individuals and teams, but for the broader systems we all inhabit.

We’re not here to pretend that local-first AI solves every problem, or that smaller models are always better (even as more companies shift toward them). Some tasks will always require heavier computation, richer context, or more sophisticated reasoning.

But what we’re advocating for is balance: a model of development where scale is intentional, not automatic; where cost is transparent, not hidden; and where performance is matched by principle.

The role of AI companies in a time of scale

We know there’s irony in an AI company writing about the environmental cost of AI. But that tension is exactly why we believe this conversation is worth having.

We live in a moment where intelligent systems are reshaping the way work happens, the way software is built, and the way problems are solved. And with that transformation comes a responsibility to ask harder questions, not only about what AI can do, but about how it does it.

Designing a lighter future, one token at a time

The good news is that sustainable AI doesn’t have to feel like a compromise.

In fact, the opposite is true. When you design for local-first execution, you get faster tools. When you train and fine-tune smaller models, you get greater efficiency and specificity.

When you minimize cloud dependency, you get stronger privacy and better offline support. And when you do all of that with measurement and transparency, you get trust, not just from users, but from every stakeholder who depends on your software.

At the end of the day, this isn’t just about saving a few watts or avoiding API bills.

It’s about building a better relationship between intelligence and infrastructure. A relationship where performance, privacy, and sustainability reinforce each other, not trade off against each other.

The tools we build reflect the values we carry forward. And in a world that’s becoming more intelligent by the day, the question is not whether we will use AI, but whether we will use it wisely, responsibly, and with awareness of the systems we shape in the process.

References

Schwartz et al., “Green AI” (2019): https://arxiv.org/abs/1907.10597

Power-Hungry Processing (2023): https://arxiv.org/abs/2311.16863

International Energy Agency: https://www.iea.org/reports/electricity-market-report

U.S. Energy Information Administration: https://www.eia.gov/electricity/monthly/

OpenAI GPT-4o Pricing: https://openai.com/api/pricing